A farmer wants to increase milk production. They ask a physicist for advice. The physicist visits the farm, takes a lot of notes, draws some diagrams, then says, "OK, I need to do some calculations."

A week later, the physicist comes back and says, "I've solved the problem and I can tell you how to increase milk production".

"Great", says the farmer, "How?".

"First", says the physicist, "assume a spherical cow in a vacuum..."

What is Science?

Science is many things to many people. At times, scientists (or, at least, science enthusiasts) seem to claim that they alone know the truth of reality. Some seem to assume that "laws of science" are equivalent to laws of nature. Some go as far as stating that nature is governed by such "laws".

Some believe that only scientific facts are true and that no metaphysics are possible. While this view is less common now, it was of major importance in the formulation of quantum theory, which still has problems admitting that reality exists. As Mara Beller (1996) notes:

Strong realistic and positivistic strands are present in the writings of the founders of the quantum revolution-Bohr, Heisenberg, Pauli and Born. Militant positivistic declarations are frequently followed by fervent denial of adherence to positivism (183).

On the other hand, some see science as theory-laden and sociologically determined. Science is just one knowledge system amongst many of equal value.

However, most of us understand that scientific theories are descriptive and idealised. And this is the starting point for me.

In practising science, I had ample opportunity to witness hundreds or even thousands of objective (or observer-independent) facts about the world. The great virtue of the scientific experiment is that you get the same result, within an inherent margin of error associated with measurement, no matter who does the experiment or how many times they do it. The simplest explanation of this phenomenon is that the objective world exists and that such facts are consistent with reality. Thus, I take knowledge of such facts to constitute knowledge about reality. The usual label for this view is metaphysical realism.

However, I don't take this to be the end of the story. Realism has a major problem, identified by David Hume in the 1700s. The problem is that we cannot know reality directly; we can only know it through experience. Immanuel Kant's solution to this has been enormously influential. He argues that while reality exists, we cannot know it. In Kant's view, those qualities and quantities we take to be metaphysical—e.g. space, time, causality, etc.—actually come from our own minds. They are ideas that we impose on experience to make sense of it. This view is known as transcendental idealism. One can see how denying the possibility of metaphysics (positivism) might be seen as (one possible) extension of this view.

It's important not to confuse this view with the idea that only mind is real. This is the basic idea of metaphysical idealism. Kant believed that there is a real world, but we can never know it. In my terms, there is no epistemic privilege.

Where Kant falls down is that he lacks any obvious mechanism to account for shared experiences and intersubjectivity (the common understanding that emerges from shared experiences). We do have shared experiences. Any scenario in which large numbers of people do coordinated movements can illustrate what I mean. For example, 10,000 spectators at a tennis match turning their heads in unison to watch a ball be batted back and forth. If the ball is not objective, or observer-independent, how do the observers manage to coordinate their movements? While Kant himself argues against solipsism, his philosophy doesn't seem to consider the possibility of comparing notes on experience, which places severe limits on his idea. I've written about this in Buddhism & The Limits of Transcendental Idealism (1 April 2016).

In a pragmatic view, then, science is not about finding absolute truths or transcendental laws. Science is about idealising problems in such a way as to make a useful approximation of reality. And constantly improving such approximations. Scientists use these approximations to suggest causal explanations for phenomena. And finally, we apply the understanding gained to our lives in the form of beliefs, practices, and technologies.

What is an explanation?

In the 18th and 19th centuries, scientists confidently referred to their approximations as "laws". At the time, a mechanistic universe and transcendental laws seemed plausible. They were also gathering the low-hanging fruit, those processes which are most obviously consistent and amenable to mathematical treatment. By the 20th century, as mechanistic thinking waned, new approximations were referred to as "theories" (though legacy use of "law" continued). And more recently, under the influence of computers, the term "model" has become more prevalent.

A scientific theory provides an explanation for some aspect of reality, which allows us to understand (and thus predict) how what we observe will change over time. However, even the notion of explanation requires some unpacking.

In my essay, Does Buddhism Provide Good Explanations? (3 Feb 23), I noted Faye's (2007) typology of explanation:

- Formal-Logical Mode of Explanation: A explains B if B can be inferred from A using deduction.

- Ontological Mode of Explanation: A explains B if A is the cause of B.

- Pragmatic Mode of Explanation: a good explanation is an utterance that addresses a particular question, asked by a particular person whose rational needs (especially for understanding) must be satisfied by the answer.

Much earlier (18 Feb 2011), I outlined an argument by Thomas Lawson and Robert McCauley (1990) which distinguished explanation from interpretation.

- Explanationist: Knowledge is the discovery of causal laws, and interpretive efforts simply get in the way.

- Interpretationist: Inquiry about human life and thought occurs in irreducible frameworks of values and subjectivity.

"When people seek better interpretations they attempt to employ the categories they have in better ways. By contrast, when people seek better explanations they go beyond the rearrangement of categories; they generate new theories which will, if successful, replace or even eliminate the conceptual scheme with which they presently operate." (Lawson & McCauley 1990: 29)

The two camps are often hostile to each other, though some intermediate positions exist between them. As I noted, Lawson and McCauley see this as somewhat performative:

Interpretation presupposes a body of explanation (of facts and laws), and seeks to (re)organise empirical knowledge. Explanation always contains an element of interpretation, but successful explanations winnow and increase knowledge. The two processes are not mutually exclusive, but interrelated, and both are necessary.

This is especially true for physics where explanations often take the form of mathematical equations that don't make sense without commentary/interpretation.

Scientific explanation

Science mainly operates, or aims to operate, in the ontological/causal mode of explanation: A explains B if (and only if) A is the cause of B. However, it still has to satisfy the conditions for being a good pragmatic explanation: "a good explanation is an utterance that addresses a particular question, asked by a particular person whose rational needs (especially for understanding) must be satisfied by the answer."

As noted in my opening anecdote, scientific models are based on idealisation, in which an intractably complex problem is idealised until it becomes tractable. For example, in kinematic problems, we often assume that the centre of mass of an object is where all the mass is. It turns out that when we treat objects as point masses in kinematics problems, the computations are much simpler and the results are sufficiently accurate and precise for most purposes.

Another commonly used idealisation is the assumption that the universe is homogeneous and isotropic at large scales. In other words, as we peer out into the farthest depths of space, we assume that matter and energy are evenly distributed in every direction. As I will show in a forthcoming essay, this assumption seems to be both theoretically and empirically false. And it seems that so-called "dark energy" is merely an artefact of this simplifying assumption.

Many theories have fallen because they relied on a simplifying assumption that distorted their answers to the point of making them unsatisfying.

A "spherical cow in a vacuum" sounds funny, but a good approximation can simplify a problem just enough to make it tractable and still provide sufficient accuracy and precision for our purposes. It's not that we should never idealise a scenario or make simplifying assumptions. The fact is that we always do this. All physical theories involve starting assumptions. Rather, the argument is pragmatic. The extent to which we idealise problems is determined by the ability of the model to explain phenomena to the level of accuracy and precision that our questions require.

For example, if our question is, "How do we get a satellite into orbit around the moon?" we have a classic "three-body" problem (with four bodies: Earth, moon, sun, and satellite). Such problems are mathematically very difficult to solve. So we have to idealise and simplify the problem. For example, we can decide to ignore the gravitational attraction caused by the satellite, which is real but tiny. We can assume that space is relatively flat throughout. We can note that relativistic effects are also real but tiny. We don't have to slavishly use the most complex explanation for everything. Given our starting assumptions, we can just use Newton's law of gravitation to calculate orbits.
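To make the idealised calculation concrete, here is a minimal sketch of what "just use Newton's law of gravitation" can look like for a circular lunar orbit. The constants are standard published values rather than anything from this essay, the 100 km altitude is a hypothetical choice, and the function name is mine.

```python
import math

# Assumed constants (not from the essay): standard published values.
GM_MOON = 4.9048695e12  # gravitational parameter of the Moon, m^3/s^2
R_MOON = 1.7374e6       # mean radius of the Moon, m


def circular_orbit(altitude_m: float) -> tuple[float, float]:
    """Return (speed in m/s, period in s) for a circular orbit.

    Idealisations: the Moon is treated as a point mass, and the
    satellite's own gravity, the pull of Earth and Sun, and all
    relativistic effects are ignored.
    """
    r = R_MOON + altitude_m            # distance from the Moon's centre
    speed = math.sqrt(GM_MOON / r)     # from G*M*m/r^2 = m*v^2/r
    period = 2 * math.pi * r / speed   # circumference divided by speed
    return speed, period


# Hypothetical example: a satellite 100 km above the lunar surface.
speed, period = circular_orbit(100e3)
print(f"speed ~ {speed:.0f} m/s, period ~ {period / 60:.0f} min")
```

Every ignored effect is a source of error. The pragmatic question is whether the answer is still good enough for the purpose at hand.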

We got to relativity precisely because someone asked a question that Newtonian approaches could not fully answer, i.e. why does the orbit of Mercury precess at the rate it does? In the Newtonian approximation, the pull of the other planets accounts for most of the observed precession, but a residual of about 43 arcseconds per century remains unexplained. In Einstein's reformulation of gravity as the geometry of spacetime, this extra precession is expected and can be calculated.
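As a rough illustration of that calculation, the sketch below uses the standard first-order formula from general relativity for the perihelion advance per orbit, Δφ = 6πGM / (c²a(1 − e²)). The formula and the orbital constants are textbook values that I am assuming here; they are not taken from this essay.

```python
import math

# Assumed values (not from the essay): standard published constants.
GM_SUN = 1.32712440018e20  # gravitational parameter of the Sun, m^3/s^2
C = 299_792_458.0          # speed of light, m/s
A_MERCURY = 5.7909e10      # semi-major axis of Mercury's orbit, m
E_MERCURY = 0.2056         # eccentricity of Mercury's orbit
PERIOD_DAYS = 87.969       # orbital period of Mercury, days


def relativistic_precession_arcsec_per_century() -> float:
    """First-order relativistic perihelion advance of Mercury."""
    # Advance per orbit, in radians.
    per_orbit = 6 * math.pi * GM_SUN / (
        C**2 * A_MERCURY * (1 - E_MERCURY**2)
    )
    # A Julian century has 36525 days.
    orbits_per_century = 36525 / PERIOD_DAYS
    # Convert radians to arcseconds (3600 arcseconds per degree).
    return math.degrees(per_orbit * orbits_per_century) * 3600


print(f"{relativistic_precession_arcsec_per_century():.1f} arcsec per century")
```

Note that this is itself an approximation: it keeps only the leading relativistic correction and treats the Sun as a point mass.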

Models

I was in a physical chemistry class in 1986 when I realised that what I had been learning through school and university was a series of increasingly sophisticated models, and the latest model (quantum physics) was still a model. At no point did we get to reality. There did seem to me to be a reality beyond the models, but it seemed to be forever out of reach. I had next to no knowledge of philosophy at that point, so I struggled to articulate this thought, and I found it dispiriting. In writing this essay, I am completing a circle that I began as a naive 20-year-old student.

This intuition about science crystallised into the idea that no one has epistemic privilege. By this I mean that no one—gurus and scientists included—has privileged access to reality. Reality is inaccessible to everyone. No one knows the nature of reality or the extent of it.

We all accumulate data via the same array of physical senses. That data feeds virtual models of world and self created by the brain. Those models feed information to our first-person perspective, using the sensory apparatus of the brain to present images to our mind's eye. This means that what we "see" is at least two steps removed from reality. This limit applies to everyone, all the time.

However, when we compare notes on our experience, it's clear that some aspects of experience are independent of any individual observer (objective) and some of them are particular to individual observers (subjective). By focusing on and comparing notes about the objective aspects of experience, we can make reliable inferences about how the world works. This is what rescues metaphysics from positivism on one hand and superstition on the other.

We can all make inferences from sense data. And we are able to make inferences that prove to be reliable guides to navigating the world and allow us to make satisfying causal explanations of phenomena. Science is an extension of this capacity, with added concern for accuracy, precision, and measurement error.

Since reality is the same for everyone, valid models of reality should point in the same direction. Perhaps different approaches will highlight different aspects of reality, but we will be able to see how those aspects are related. This is generally the case for science. A theory about one aspect of reality has to be consistent, even compliant, with all the other aspects. Or if one theory is stubbornly out of sync, then that theory has to change, or all of science has to change. Famously, Einstein discovered several ways in which science had to change. For example, Einstein proved that time is particular rather than universal. Every point in space has its own time. And this led to a general reconsideration of the role of time in our models and explanations.

Sources of Error

A scientific measurement is always accompanied by an estimate of the error inherent in the measurement apparatus and procedure. Which gives us a nice heuristic: If a measurement you are looking at is not accompanied by an indication of the errors, then the measurement is either not scientific, or it has been decontextualised and, with the loss of this information, has been rendered effectively unscientific.

Part of every good scientific experiment is identifying sources of error and trying to eliminate or minimise them. For example, if I measure my height with three different rulers, will they all give the same answer? Perhaps I slumped a little on the second measurement? Perhaps the factory glitched, and one of the rulers is faulty?

In practice, a measurement is accurate to some degree, precise to some degree, and contains inherent measurement error to some degree. And each degree should be specified to the extent that it is known.

Accuracy is itself a measurement, and as a quantity reflects how close to reality the measurement is.

Precision represents how finely we are making distinctions in quantity.

Measurement error reflects uncertainty introduced into the measurement process by the apparatus and the procedure.

Now, precision is relatively easy to know and control. We often use the heuristic that a ruler is precise to half the smallest division. So a ruler marked with millimetres is considered precise to 0.5 mm.

Let's say I want to measure my tea cup. I have three different rulers. But I also note that the cup has rounded edges, so knowing where to measure from is a judgment call. I estimate that this will add a further 1 mm of error. Here are my results:

- 83.5 ± 1.5 mm

- 86.0 ± 1.5 mm

- 84.5 ± 1.5 mm

The average is 84.7 ± 1.5 mm. So we would say that we think the true answer lies between 83.2 and 86.2 mm. And note that even though I have an outlier (86.0 mm), this is in fact within the margin of error.
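The arithmetic can be checked with a minimal sketch like the one below. It takes the three readings and the ±1.5 mm margin given above, computes the mean, and tests whether every reading falls inside the band around it. The variable names are mine.

```python
# The three ruler readings from the text, in millimetres.
readings_mm = [83.5, 86.0, 84.5]

# 0.5 mm ruler precision plus the estimated 1 mm judgment-call error.
uncertainty_mm = 1.5

mean_mm = sum(readings_mm) / len(readings_mm)
low_mm = mean_mm - uncertainty_mm
high_mm = mean_mm + uncertainty_mm

# True only if every reading lies inside the band around the mean.
all_within = all(low_mm <= r <= high_mm for r in readings_mm)

print(f"mean = {mean_mm:.1f} mm, band = {low_mm:.1f} to {high_mm:.1f} mm")
print(f"all readings within the band: {all_within}")
```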

As I was measuring, I noted another potential source of error. I was guesstimating where the widest point was. And I think this probably adds another 1-2 mm of measurement error. When considering sources of error in a measurement, one's measurement procedure is often a source. In science, clearly stating one's procedure allows others to notice problems the scientists might have overlooked. Here, I might have decided to mark the cup so that I measured at the same point each time.

Now the trick is that there is no way to get behind the measurement and check with reality. So, accuracy has to be defined pragmatically as well. One way is to rely on statistics. For example, one makes many measurements and presents the mean value and the standard deviation (which requires more than three measurements).
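For illustration only, here is how the mean and sample standard deviation could be computed with Python's standard `statistics` module. I reuse the three tea cup readings rather than invent new data, so, as noted above, the sample is too small for the result to carry much weight.

```python
import statistics

# The three tea cup readings from earlier, in millimetres.
# A real analysis would use many more measurements than this.
readings_mm = [83.5, 86.0, 84.5]

mean_mm = statistics.mean(readings_mm)

# Sample standard deviation (divides by n - 1, not n).
stdev_mm = statistics.stdev(readings_mm)

print(f"mean = {mean_mm:.1f} mm, standard deviation = {stdev_mm:.1f} mm")
```

The spread of the readings is then an empirical estimate of the uncertainty, rather than one worked out from the ruler markings and a judgment call.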

The point is that error is always possible. It always has to be accounted for, preferably in advance. We can take steps to eliminate error. An approximation always relies on starting assumptions, and these are also a source of error. Keep in mind that this critique comes from scientists themselves. They haven't been blindly ignoring error all these years.

Mathematical Models

I'm not going to dwell on this too much. But in science, our explanations and models usually take the form of an abstract symbolic mathematical equation. A simple, one-dimensional wave equation takes the general form:

y = f(x,t)

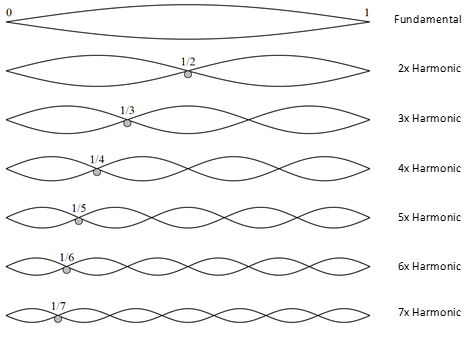

That is to say that the displacement of the wave (y) is a function of position (x) and time (t). In other words, the displacement at any point depends on where that point is in space and on the moment in time. This describes a wave that, over time, moves in the x direction (left-right) and displaces in the y direction (up-down).

More specifically, we model simple harmonic oscillations using the sine function. In this case, we know that spatial changes are a function of position and temporal changes are a function of time.

y(x) = sin(x)

y(t) = sin(t)

It turns out that the relationship between the two functions can be expressed as:

y(x,t) = sin(x ± t)

If the wave is moving right, we subtract time, and if the wave is moving to the left, we add it.

The sine function smoothly changes between +1 and -1, but a real wave has an amplitude, and we can scale the function by multiplying it by the amplitude.

And so on. We keep refining the model until we get to the general formula:

y(x,t) = A sin(kx ± ωt ± ϕ).

Where A is the amplitude (the maximum displacement), k is the wavenumber, ω is the angular frequency, and ϕ is the phase.

The displacement is periodic in both space and time. Since k = 2π/λ (where λ is the wavelength), the function returns to the same spatial configuration whenever x increases by a whole number of wavelengths, nλ (where n is a whole number). Similarly, since ω = 2π/T (where T is the period or wavetime), the function returns to the same temporal configuration whenever t increases by nT.
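The periodicity can be checked numerically with a short sketch. The function below implements the right-moving form of the general formula, y(x,t) = A sin(kx − ωt + ϕ), with k = 2π/λ and ω = 2π/T. The parameter values are arbitrary choices of mine, purely for illustration.

```python
import math


def wave(x, t, amplitude=1.5, wavelength=2.0, period=0.5, phase=0.0):
    """Displacement of an idealised right-moving sine wave."""
    k = 2 * math.pi / wavelength   # wavenumber
    omega = 2 * math.pi / period   # angular frequency
    return amplitude * math.sin(k * x - omega * t + phase)


x, t = 0.3, 0.1          # an arbitrary point in space and time
y = wave(x, t)

# Shifting x by whole wavelengths (here 2 wavelengths of 2.0 each)
# returns the same displacement.
y_space = wave(x + 2 * 2.0, t)

# Shifting t by whole periods (here 3 periods of 0.5 each) does too.
y_time = wave(x, t + 3 * 0.5)

print(math.isclose(y, y_space, abs_tol=1e-9))
print(math.isclose(y, y_time, abs_tol=1e-9))
```

The comparison uses a small tolerance rather than exact equality because floating-point arithmetic is itself an approximation.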

What distinguishes physics from pure maths is that, in physics, each term in an equation has a physical significance or interpretation. The maths aims to represent changes in our system over time and space.

Of course, this is idealised. It's one-dimensional. Each oscillation is identical to the last. The model has no friction. If I add a term for friction, it will only be an approximation of what friction does. But no matter how many terms I add, the model is still a model. It's still an idealisation of the problem. And the answers it gives are still approximations.

Conclusion

No one has epistemic privilege. This means that all metaphysical views are speculative. However, we need not capitulate to solipsism (we can only rely on our own judgements), relativism (all knowledge has equal value) or positivism (no metaphysics is possible).

Because, in some cases, we are speculating based on comparing notes about empirical data. This allows us to pragmatically define metaphysical terms like reality, space, time, and causality in such a way that our explanations provide us with reliable knowledge. That is to say, knowledge we can apply and get expected results. Every day I wake up and the physical parameters of the universe are the same, even if everything I see is different.

Reality is the world of observer-independent phenomena. No matter who is looking, when we compare notes, we broadly agree on what we saw. There is no reason to infer that reality is perfect, absolute, or magical. It's not the case that somewhere out in the unknown, all of our problems will be solved. As a historian of religion, I recognise the urge to utopian thinking and I reject it.

Rather, reality is seen to be consistent across observations and over time. Note that I say "consistent", not "the same". Reality is clearly changing all the time. But the changes we perceive follow patterns. And the patterns are consistent enough to be comprehensible.

The motions of stars and planets are comprehensible: we can form explanations for these that satisfactorily answer the questions people ask. The patterns of weather are comprehensible even when unpredictable. People, on the other hand, remain incomprehensible to me.

That said, all answers to scientific questions are approximations, based on idealisations and assumptions. Which is fine if we make clear how we have idealised a situation and what assumptions we have made. This allows other people to critique our ideas and practices. As Mercier and Sperber point out, it's only in critique that humans actually use reasoning (An Argumentative Theory of Reason, 10 May 2013).

We can approximate reality, but we should not attempt to appropriate it by insisting that our approximations are reality. Our theories and mathematics are always the map, never the territory. The phenomenon may be real, but the maths never is.

This means that if our theory doesn't fit reality (or the data), we should not change reality (or the data); we should change the theory. No mathematical approximation is so good that it demands that we redefine reality. Hence, all of the quantum Ψ-ontologies are bogus. The quantum wavefunction is a highly abstract concept; it is not real. For a deeper dive into this topic, see Chang (1997), which requires a working knowledge of how the quantum formalism works, but makes some extremely cogent points about idealised measurements.

In agreeing that the scientific method and scientific explanations have limits, I do not mean to dismiss them. Science is by far the most successful knowledge-seeking enterprise in history. Science provides satisfactory answers to many questions. For better or worse, science has transformed our lives (and the lives of every living thing on the planet).

No, we don't get the kinds of answers that religion has long promised humanity. There is no certainty, we will never know the nature of reality, we still die, and so on. But then religion never had any good answers to these questions either.

~~Φ~~

Beller, Mara. (1996). "The Rhetoric of Antirealism and the Copenhagen Spirit". Philosophy of Science 63(2): 183-204.

Chang, Hasok. (1997). "On the Applicability of the Quantum Measurement Formalism." Erkenntnis 46(2): 143-163. https://www.jstor.org/stable/20012757

Faye, Jan. (2007). "The Pragmatic-Rhetorical Theory of Explanation." In Rethinking Explanation. Boston Studies in the Philosophy of Science, 43-68. Edited by J. Persson and P. Ylikoski. Dordrecht: Springer.

Lawson, E. T. and McCauley, R. N. (1990). Rethinking Religion: Connecting Cognition and Culture. Cambridge: Cambridge University Press.

Note: 14/6/25. The maths is deterministic, but does this mean that reality is deterministic?