“The story of human origins has never really been about the past. Pre-history is about the present day. It always has been.” --Stefanos Geroulanos

Earlier in the year, I addressed some methodological issues in Buddhist Studies in three blog posts:

- History as Practiced by Philologists: A Response to Levman's Response to Drewes.

- Notes on Finding Buddhists in Global History.

- Early Buddhism as an Orientalist Construct.

Human beings have always had a deep and abiding fascination with origins. For millennia humans have told stories about the origins of the universe, the origins of life, and the origins of humans. More recently we have sought the origins of tool use, consciousness, language, writing, religion, race, and inequality.

The idea is that if the past illuminates the present, then origins are the ultimate source of such illumination. If we can just understand the origins of a phenomenon, we can understand everything about it: because the origin is the source. When we say that scientists are exploring the origins of the universe, the origins of life, or the origins of consciousness, we mean that they seek the source of the universe, life, and consciousness. We seek to understand, at the most fundamental level, what sustains the universe, life, and consciousness, because we desperately want them to continue. But we also want to have more leverage to ensure our own flourishing. We want to be able to predict and thus avoid misfortune.

The discovery of origins is not always as illuminating as we might hope. In this essay, I'm going to explore some scenarios in which a successful quest to discover the origins does not lead to illumination of the present, let alone to the source of illumination. I will show that, at least to some extent, our quest for origins is misguided. As a general approach, it is flawed. There are many situations where knowing the origin does not provide us with accurate information about the present.

The Etymological Fallacy

Perhaps the most obvious place to start is with the etymological fallacy. Presented with an unfamiliar word we adopt various strategies to discover what it means. One of these strategies is to enquire into how the word was used in the past. The word etymology is itself ancient and still used in that old sense: from the Greek etymon "true sense, original meaning" and logia "speaking of".

The idea here is that if we can only trace the word back to its origins then we get the "original" meaning and this will explain how to use the word in the present. The problem here is that etymology, or better historical phonology, is not a tool developed to answer this question of what a word originally meant in one language. Rather it developed to discover the common grammatical and lexical roots of two or more different languages.

This approach was inaugurated in European culture by William Jones (ca 1786 CE), who noticed structural similarities between Sanskrit, Old Persian, Latin, and Greek. The similarities were of such a type that the languages in question had to spring from a common source. And if there was a common language, then there must have been a culture in which the language was spoken. This project led to the plausible reconstruction of Proto-Indo-European, an entirely unattested language last spoken some 3000 years ago. It was soon linked to archaeology so that we could conjecture where and when the PIE-speakers lived and to some extent how they lived. This is one of the great successes of philology.

An example that illustrates the limits of etymology for explaining the present is the word "nice". This word is presently used as a weak or banal compliment or, equally often, as a sarcastic compliment. "It's nice to see you", "That's nice, Dear...", "Nice hat!". This sense arose out of the 19th century meaning "kind, thoughtful" which was intended without sarcasm or irony. This in turn grew out of the 18th century meaning "agreeable, delightful". In the 16th century, nice meant "precise, careful". This in turn developed from the 15th century usage "dainty, delicate". In the 14th century nice meant "fussy, fastidious", which all goes back to the 13th century when the word came into English from French where it meant "careless, clumsy; weak; poor, needy; simple, stupid, silly, foolish".

In fact, we can trace this word all the way back to Proto-Indo-European by way of Latin. The Latin nescius combines the prefix ne- "not" with the verb scire "to know". In the oldest sense that we can derive, nice means "ignorant". We can even see "nice" as a contraction of the word nescient.

So now we can ask the question: Having identified that the original meaning of "nice" was "nescient", what does this tell us about the present use?

In this case, it's no help because the meaning has changed so often, so much, and in such unexpected ways. In 2024, the way we use "nice" is entirely unconnected to "nescient". While "nice" is an extreme example, all words change their meaning and their pronunciation over time. There is a kind of evolution by mutation and natural selection going on all the time.

Etymology as a method comes under the heading of semantics, which is broadly concerned with what words mean. Semantics is a subfield of semiotics, the study of meaning generally. There is another approach to thinking about words which is pragmatics. In a pragmatics approach, rather than inquiring into (abstract) meaning, we ask "What does a word or phrase do?". A classic expression of this is found in Ludwig Wittgenstein's Philosophical Investigations:

“For a large class of cases—though not for all—in which we employ the word “meaning” it can be defined thus: the meaning of a word is its use in the language.” (1967 Philosophical Investigations. Section 43)

This is often abbreviated to "meaning is use", which is a good rule of thumb. The meaning of words is not intrinsic to the words but rather emerges from how a language-using community understands the words. Virtually all words change their meaning over time, though some kinds of words, such as kin terms and numbers, tend to be conserved more than others.

John "J. L." Austin and his one-time student, John Searle, developed the idea of speech acts, which focus on how we do things with speech. An example might be "I now pronounce you man and wife". The words have meaning, but the statement is intended to mark the change in status of two single people into one married couple. This is a change in legal status affecting, for example, how much tax they pay. Austin and Searle looked into how merely saying some words can result in a change of legal status. Searle's book The Construction of Social Reality is a brilliant exploration of this problem.

Austin and Searle classified speech acts according to locution (what was said), illocution (what a speech act was intended to do), and perlocution (what was understood or done).

If we take this approach, the idea of origins has no great appeal unless, for example, we need to know how a word was used in a historical document. The way the word was used in the past is only relevant to the past. The way that a word is used in the present is what it means in the present.

Let's take the example of "literally" being used as an intensifier: "I literally died" (said for example by someone living, who got a fright).

The process of words being used as intensifiers in this way is a constant in English. There are dozens of examples across time (including "nice"). And yet this one word has become a particular bugbear for language pedants. "Literally" means, according to the Online Etymology Dictionary, "according to the exact meaning of the word." But the dictionary also notes that the hyperbolic use of literally, to mean "in the strongest admissible sense", was first recorded in the late 17th century. Using "literally" as an intensifier is not new; complaining about it is.

Complaining about language use is very much an elitist pastime, in which parts of the British Empire continue to assert their sense of entitlement and superiority by criticising vernacular speech that differs from the speech of the historical aristoi. The British ruling classes despise the plebeian hoi polloi. One way to align oneself with the elite, and hope to curry favour, is to mount a defence of the way the elite speak. In Britain, this goes back to 1066 and a land of people speaking Old English being ruled by French-speaking aristoi from Normandy.

Cases like "nice" or "literally" show that what a word previously meant is irrelevant if people just agree to start using it to mean something different. Intensifiers are subject to fashions and thus change more rapidly than other words. Look up the etymology of, for example, "very" or "terrific".

In Buddhist studies, we find numerous examples of the etymological fallacy because ancient Buddhists very often used words in new ways that were unrelated to the previous use. If you look at Buddhists writing about a word like vedanā, you will see them carefully explain that the word derives from the root √vid "to know (via experience)" and then proceed, without irony, to explain that the word is never used in that sense, but is instead used to indicate the positive and negative hedonic qualities of sensory experience (sukha, duḥkha, asukhamaduḥkha). Since there was no word that meant this, they adapted one to their purposes. No amount of etymologising or semantic analysis will reveal the Buddhist usage, because it's based on pragmatic considerations.

Similarly, when translators render vedanā into English, they typically choose either "feelings" or "sensations". Neither of these words conveys "the positive and negative hedonic qualities of sensory experience" or anything like it. Moreover, no one really likes either translation and good arguments have been put forward against both of them. However, in the end, there is no common English word that denotes "the positive and negative hedonic qualities of sensory experience". This concept is not part of our worldview, with one important exception. Neuroscientists have a very similar concept of experience having positive and negative hedonic qualities, and they have adapted the word valence to convey this. Valence means "strength, or capacity" (cognate with English wield). Again, this is pragmatic rather than semantic.

Staying in the realms of philology, we come to the idea of the original text or urtext.

The Urtext fallacy

As the European Crusaders crashed about murdering Muslims and looting the Middle East they occasionally stumbled on hand-copied bibles from the Syriac or Ethiopic Churches. These texts were recognisably Christian Bibles, but they contained obvious and significant differences from European Bibles. Since the Bible was considered the literal(!) word of God, that two Bibles might have any differences, let alone major differences, was intolerable (and almost inconceivable).

For theologians, it was deeply troubling to discover that some Bibles told different stories, or at least the same stories in different ways. Theologians were concerned that the perfect words of god had been adulterated and they could not be entirely sure that the Bible they had was the right one.

Drawing on methods of interpreting legal documents, theologians began to develop methods for recovering the "original" Bible. Underpinning this exercise was an assumption that we find prominently enunciated in the Bible itself, viz:

In the beginning was the Word, and the Word was with God, and the Word was God. (John 1:1)

Later, the methods used to reconstruct the words of god were codified and systematised by Karl Lachmann (1793-1851). Although there were competing systems and a lot of criticism, Lachmann is the one we remember (which itself may reflect the "great men of history" fallacy).

The method begins with the assumption that there was once a perfect original, also known as an urtext, so called because in German ur means "original, earliest, primitive". Germans were very influential in the early days of philology: the brothers Grimm and Humboldt come to mind, for example.

As manuscripts are repeatedly copied by scribes, errors and amendments may creep in and accumulate. By carefully comparing witnesses using Lachmann's method, the textual critic may restore the (perfect) "original text" by eliminating all the errors, even though none of the surviving documents on their own reflects the pure original any longer.

This unspoken assumption of original perfection is also apposite to the practice of philology on Buddhist literature. God did not make errors. Neither, apparently, did the Buddha. So any and all errors must be adventitious and can be safely eliminated. This leads to some oddities, however.

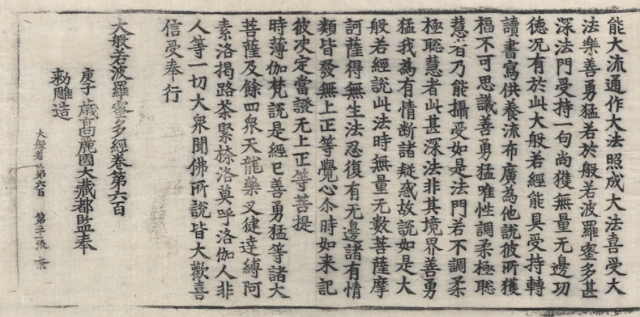

For example, more or less every Sanskrit Prajñāpāramitā manuscript spells bodhisattva as bodhisatva and ārya as āryya. In almost every case, editors have tacitly "corrected" this to conform to the classical Sanskrit spelling. However, if a scribe misspells a word consistently throughout, and if all of that scribe's contemporaries also misspell the word in the same way, this is not a "mistake" to be corrected. Rather, it's a distinct orthography that is characteristic of the literature and should be preserved. Some scholars have adopted the Buddhist spelling bodhisatva in recognition of this.

Why do scholars adopt this practice of privileging classical Sanskrit? One answer might be that Sanskrit is supposedly the original language of the Indo-Aryans, that is to say, the Vedic-speakers or Vaidikas who migrated into the Punjab from Afghanistan, ca 1500 BCE. Sanskrit refers to itself as the saṃskṛtabhāṣā "perfected speech". Treating Buddhists as idealised Sanskrit speakers is irrational.

The idea of a perfect Buddhist ur-text is also incoherent. Buddhists often wrote as they spoke, in a vernacular Middle-Indic language, with more or less cultural influence from Sanskrit.

Moreover, while scribal error was a factor in changing Buddhist texts, the active intervention of Buddhists making deliberate changes was a much greater factor. Any given story usually appears in several variants. Most of the suttas contain narrative elements drawn from a common pool and put together in novel ways. Few suttas are entirely "original".

Moreover, long after the putative death of the Buddha, Buddhists continued to both redact their existing texts and to compose new texts attributed to the Buddha. Is this attribution to the Buddha a new thing or is it a continuation of standard practice? We don't know because the "fossil record" of texts is incomplete and there is an epistemic horizon because writing was first used in India in the mid-3rd century BCE. Prior to this, we don't know what Buddhist texts looked like. Some Buddhist Studies scholars who want Buddhism to be old assume strong continuity going back in time. This gives them the idea that, for example, Pāli suttas are an authentic and accurate record of Buddhist thought going back centuries before the advent of writing. In this view, nothing changes over time; or things only change in insignificant ways.

Frankly, this position is weird for a religion that preaches that "everything changes", and for a religion whose study is complicated by the fact that, while scripture was canonised in Sri Lanka, it never was in India. The fact is that Buddhist sutras underwent constant revision and renewal in India. We also know that Buddhism emerged along with the rise of the second urbanisation of India, a few centuries of extraordinary social and political change across Northern India. The idea that prior to writing, nothing much changed is clearly nonsensical.

In Buddhist Studies, there is no hope of using the method of eliminating errors to reveal the pure original words of the Buddha. No Buddhist urtexts are waiting to be revealed. Rather such texts were clearly constructed piecemeal, more often than not using pericopes or stock phrases that are repeated across the canon: like evaṃ mayā śrutaṃ. And they continued to be deliberately changed even after being written down.

The literature we have is the product of centuries of oral storytelling that was not concerned with preserving "the original" but with communicating Buddhist values to each new generation on its own terms. This was followed by centuries of written transmission which involved editing, redacting, and other ways of introducing variation that are not "errors" to be removed.

The Evolutionary Tree Fallacy

One of the most powerful scientific models we have is Darwin's evolutionary tree, famously first sketched in a notebook in 1837.

This developed into a model in which new species branch off from existing species. A modern example looks like this:

However, this is not how evolution proceeds at all, especially in the Bacteria/Archaea kingdoms. This linear-branching tree obscures more than it reveals.

1. All species live in ecosystems and cannot be (fully) understood apart from that ecosystem. If you isolate any living thing from its ecosystem it typically dies soon afterwards. We can supply an ersatz environment that keeps them alive but, for example, many zoo animals have severe behavioural problems associated with living in captivity. The more intelligent the animal, the more they suffer from captivity.

2. Almost all species live in some kind of symbiosis. All animals, for example, have a microbiome on the skin and lining the gut consisting mainly of bacteria and fungi. These organisms act as an interface between us and the world and contribute in many different ways to our well-being (not least of which is helping us digest plants). Plants have mycorrhizal fungi that penetrate both roots and soil, connecting the two. Claims that plants use this fungal network as a kind of "internet" seem to have been greatly overstated, but the symbiosis is real and vitally important. The macrobiome cannot do without its microbiome.

3. Hybridisation of species is far more common than classical taxonomies allow. It is very common in organisms that use external fertilisation such as fish and plants. It is the norm amongst bacteria and archaea since both support extra-genomic (free-floating) genes and have the ability to share genes with any other bacteria. It is questionable whether the term "species" applies to them at all.

4. Virtually all life is communal, cooperative, and mutually interdependent. Yes, there is competition as well, and sometimes this results in the death of the loser, but this is secondary. All bacteria definitely live in communities, usually with multiple species contributing to the persistence of the whole. Social animals routinely outstrip their more solitary cousins. The collective aspect of life defies the paradigmatic reductionist approach to science. We can see Dawkins' "selfish gene" as neoliberal individualism and selfishness applied to biology.

The quest for origins is fundamentally a reductive approach to knowledge: present-day complexity is reduced to a single simple starting point. But life is irreducible. There is more to be gained from studying a living organism in its ecosystem than from killing and dissecting it.

While the tree metaphor makes evolution seem simple, it's not. A better model is the braided stream, which splits into multiple streams but also recombines.

This is more like what evolution looks like in reality. Especially if you think of the river as just one manifestation of the water cycle. The origins of a river are not simplistic. Despite the Victorian fascination with discovering "the source of the Nile" and so on, the truth is we can never point to one spot and say, this is the origin of a river. Rather the origins of rivers are to be found in the water cycle.

Surface water evaporates and plants transpire, and wind transports water-laden air, which falls as rain on oceans and land. On land, rain forms rivers and lakes and so on, only to evaporate all over again. Where is the origin? It is nowhere.

Nor does the water cycle happen in isolation. It depends on other physical, often cyclic, processes, including numerous oceanographic, atmospheric, and geological processes.

Everything is interconnected. The origins of species may not be accessible to reductionist approaches since evolution is not linear and nor are most ecosystems. This brings us to the topic of emergence.

Emergence

Let us say that we wanted to understand the properties of table salt: cubic white crystals of sodium chloride. The reductionist approach is to break the substance into its constituents. In this case, we might electrolyse molten sodium chloride to get sodium metal and chlorine gas. Sodium is a soft grey metal that reacts violently with water to produce hydrogen gas and enough heat to spontaneously combust the hydrogen. Chlorine is a pale green gas that reacts with water to form hydrochloric acid, which is what our stomach uses to digest food. If inhaled or in contact with the eyes, chlorine can cause severe acid burns. (I have personal experience of both elements).

What have we learned about table salt? On this model, we expect sodium chloride to be a grey-green material that is wildly explosive and corrosive. But of course, this is not what sodium chloride is like. The reason is that in changing from elemental forms to compounds we add something that is not a new substance. That something is structure. When we add structure to sodium and chlorine we get something new that has its own properties. While there are many different definitions of emergent property, my definition derives from the book Analysis and the Fullness of Reality by Richard H. Jones. Here it is:

An emergent property is a property that derives from structure rather than substance.

I treat "system" and "structure" as the dynamic and static elements of the same concept. Reductionism is concerned with building blocks. Antireductionism (aka holism, or emergentism) is concerned with how arranging those building blocks into static structures and/or dynamic systems allows new properties to emerge that are largely dependent on how the building blocks are arranged.

Think of a ship. A ship has to float. But the fact that it floats has less to do with the substance we make the ship out of and more to do with the structure. Large ships are made from steel which is roughly eight times denser than water. But by arranging it so that the overall volume enclosed by the steel is more than eight times larger than a solid steel ingot, we can make the average density of the hull small enough to float. Floating in water is not a fundamental property of steel. It's an emergent property of hull-shaped objects made of virtually any material no matter how dense they are naturally. One could even imagine a boat made of neutronium (which has a density of almost 400 quadrillion kg/m³, compared to water at 1000 kg/m³).
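The arithmetic behind the ship example can be sketched in a few lines of Python. This is only a rough illustration, not naval engineering: the function names are hypothetical, the density of steel is rounded to 8000 kg/m³ to match the "roughly eight times" figure, and the air inside the hull is treated as weightless.

```python
# Densities in kg/m^3. The steel value is a round-number assumption chosen to
# match "roughly eight times denser than water"; real steels vary.
WATER_DENSITY = 1000.0
STEEL_DENSITY = 8000.0


def average_density(material_density, material_volume, enclosed_volume):
    """Mass of the hull material divided by the total volume the hull encloses.

    Assumes the enclosed space is otherwise empty (air treated as weightless).
    """
    if enclosed_volume < material_volume:
        raise ValueError("a hull cannot enclose less volume than its own material")
    mass = material_density * material_volume
    return mass / enclosed_volume


def floats(material_density, material_volume, enclosed_volume,
           fluid_density=WATER_DENSITY):
    """True if the hull's average density is below that of the fluid."""
    return average_density(material_density, material_volume,
                           enclosed_volume) < fluid_density


def min_volume_ratio(material_density, fluid_density=WATER_DENSITY):
    """The enclosed volume must exceed this multiple of the material's volume."""
    return material_density / fluid_density


if __name__ == "__main__":
    # One cubic metre of steel as a solid ingot, then shaped into a 10 m^3 hull.
    print(floats(STEEL_DENSITY, 1.0, 1.0))
    print(floats(STEEL_DENSITY, 1.0, 10.0))
    print(min_volume_ratio(STEEL_DENSITY))
```

Nothing about the steel changes between the two calls to floats; only the arrangement does. The same threshold calculation applies to any material, however dense: the required ratio of enclosed volume to material volume simply gets larger.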

Biologists, being concerned with complex interacting systems and structures, tend to understand the limits of reductionism very well. One can only learn so much from dissecting an organism. Understanding it requires that we study living examples, in their communities, and within their ecosystems. This means that to understand an organism we have to understand how it interacts with the systems and structures that it is embedded in.

However, the philosophy and practice of science are generally dominated by reductionists. So the way we understand science as a practice is distorted in favour of substances, reductive methods, and reductive explanations. And as a result many people believe that if we can only discover and explain the most basic elements of the universe, we will understand everything. The biologist knows this is not true.

This idea ran onto the rocks almost 100 years ago with the development of quantum theory. Quantum theory operates like a black box. If we plug numbers in, we get out predictions in the form of probabilities, and those predicted probabilities are very consistent with observations. The problem is that no one knows why the Schrödinger equation works or to what physical reality it corresponds. Many physicists take an insouciant attitude to this, arguing that since the equations work, there is no mileage in asking why (an attitude more like that of the medieval Roman Catholic Church than that of Galileo Galilei).

This disconnect has only been made worse by experimental proof that certain quantum phenomena are non-local - i.e. not constrained by space and time in the way that we expect from classical physics. Locality is explicit and implicit in how we think about the world. Some of the most basic information we get about the world, for example, is our location, orientation, and extension in space. If this is not relevant on the smallest scale then it seems unlikely that we will ever understand that scale in any conventional sense.

I think Kant was correct to argue that, as far as human minds are concerned, location and extension are baked into how we think about the world. Without them, we are literally and figuratively lost. And it has been experimentally proved that locality is not fundamental. One way around this is to posit that locality is a function of the degree of entanglement between microscopic regions of space-time, i.e. that locality itself is an emergent property of a more fundamental property. Whether this bears fruit only time will tell. At this point, however, it appears unlikely that studying the origins of matter will solve this problem.

The "Original Buddhism" Fallacy

A constant theme of discussion in Buddhist texts is authenticity and the threat posed by inauthenticity. This continues to be an important theme for Buddhists. At its most banal, authentic means "our style of Buddhism" and inauthentic means "any other style of Buddhism". At the other end of the scale, we might say that authentic means "leads to liberation" in practice (as opposed to in theory), and inauthentic means "does not lead to liberation". Between these two extremes is a range of views, often based on conformity with some template such as certain old texts.

A common theme for Buddhists seeking to legitimise their practice of Buddhism is to cite some authority that suggests that this or that practice is prominent in older Buddhist literature (though the dating of Buddhist texts by non-historians is quite nutty). This way of thinking has led to many formulations that purport to represent "original Buddhism", "pre-sectarian Buddhism", or "Buddhism before Buddhism". The general process is that employing a variety of heuristics, one selects certain texts, ideas, or practices (excluding all others) and from them, one makes an argument that this is how all Buddhists went about things in the past, or that this is what the Buddha did (when he was "all Buddhists").

The idea, for example, that the Pāli suttas represent "the actual words of the Buddha" (buddhavacana) holds powerful sway over some Buddhists. A ragtag group of scholars have pursued projects like identifying the language the Buddha spoke. On the other hand, other scholars have pointed out that the very notion of a "historical Buddha" is incoherent because of the absence of any primary sources (i.e. contemporary, written, eyewitness accounts).

Given the ubiquitous appeal of these "origin" ideas, it is strange that so few Buddhists will admit that all the Buddhist traditions for which we have reliable documentation abandoned so-called buddhavacana in favour of new ideas and practices. All of them, including the Theravādins (who seem to make the most noise about "authenticity").

It is perhaps the single most striking fact about the Buddhist religion that everyone claims to be following "the teachings of Buddha" when in point of fact, none of them does. Rather they all follow the teachings of later interpreters and reformers. All modern forms of Buddhism are just that: modern. This is not problematic per se; just in obvious conflict with the idea that any modern person is engaged in the practice of original or early Buddhism.

The closest we get to an admission of this is the anachronistic "back to Buddha" groups, including many Theravādins (and ex-Theravādins) who seek to reconstruct "original Buddhism" by selecting (and excluding) extracts from the Pāli suttas. In point of fact, those who take this approach invariably invent a new form of Buddhism that has never existed before. This is inevitable because we don't live in Iron Age India and we do have conditioning from modernity.

David McMahan famously identified three powerful modern influences on what he called "Buddhist Modernism", a term he chose deliberately to suggest that living Buddhism owes more to modernity (especially Romanticism, Protestantism, and Scientific Rationalism) than it does to Buddhism. It's a pity that McMahan chose not to address the political dimension in which Liberalism is also a major influence on Buddhist Modernism. However, the basic approach is sound. Yes, we are Buddhists, but we are also modernists. McMahan's view that modernism is the dominant aspect is controversial but not necessarily wrong. It's certainly food for thought.

One of the main methods for seeking original Buddhism is one I noted above. Exegetes assume that texts have an objectively factual core, which has been overlain with adventitious elements including "superstition". The idea is that if we just subtract the superstition we arrive at historical facts.

Sadly, this method is incoherent if only because it's clear that the authors of the Pāli suttas themselves did not make such a distinction. To the authors, it was all buddhavacana, including all the miracles, superstitions, and supernatural entities. One cannot cut away any part of it without doing violence to the literature, though this has not stopped prominent Buddhist leaders from taking such an approach.

The ideas and practices expressed in the texts reflect the beliefs of the authors and redactors, and such beliefs clearly changed, often radically, over time. The supernatural and miracles appear to have been central to this worldview. The idea that these elements are absent amongst present-day Buddhists is naive at best.

Conclusion

It's not that the quest for origins is pointless. It can be illuminating. There are, however, two major problems: (1) locating origins in the deep past is seldom possible (because of, e.g., the epistemic horizon of "history" represented by the advent of writing); and (2) even when we can identify origins, they often turn out to be uninformative.

Origins can be uninformative because: there never was a perfect or idealised "original" to begin with; because of continual deliberate change over time which cannot always be traced or undone; because contemporary meaning is contemporary use; because of the reductive nature of the quest for origins and the emergent nature of the phenomena of interest; or because the methods we use reflect all manner of unexamined and untenable assumptions.

In seeking knowledge, the methods we use are important. On this I disagree with my sometime mentor Richard Gombrich who has argued that method all boils down to conjecture and refutation. While I remain grateful to Richard for his insights and generous help, I think his position on this issue is easily refuted. All too often it is lack of attention to methods that scuppers Buddhist Studies scholarship.

I do agree that the general principle of conjecture and refutation is important. In the ideal, this is what we are all doing, after all. Still, one has to pay attention to methodological issues to avoid making gross mistakes, such as treating a figure as "historical" when there are no primary sources for that figure. Without such sources, the figure does not qualify as "historical". Another gross mistake is applying philological methods to history as though the change of topic and media makes no difference. Human behaviour does not change in the same way as human language. This ought to be a no-brainer and yet when philologists write about history, they simply ignore the methods of history. There is even a tendency to denigrate historians who insist on historical methods (with Gombrich's protégé, Alex Wynne, being perhaps the worst offender).

I learned Buddhist Studies from reading hundreds of articles and dozens of books. Very few of these products of scholarship discuss methodology in a meaningful way. One of the exceptions is Jan Nattier's (2003) influential book A Few Good Men. My impression is that methodology is generally downplayed in Buddhist Studies and that religious ideas are often taken at face value.

What seems clear is that few Buddhist Studies scholars are capable of articulating basic historical methods, let alone of applying them, and as a result we see a lot of junk scholarship that can only lower the value of Buddhist Studies in academia (a dangerous situation under neoliberalism). In a sense, the field of Buddhist Studies is in the midst of an unacknowledged methodological crisis.

~~oOo~~

Bibliography

Jayarava. (2013). Evolution: Trees and Braids.

Geroulanos, Stefanos. (2024). The Invention of Prehistory: Empire, Violence, and Our Obsession with Human Origins.

Graeber, D. and Wengrow, D. (2021). The Dawn of Everything.

Jones, Richard H. (2013). Analysis & the Fullness of Reality: An Introduction to Reductionism & Emergence. Jackson Square Books.

Searle, John R. (1995). The Construction of Social Reality. Penguin.